For most of the clients I've been to, scaling out a JMS messaging layer with ActiveMQ is a priority. There are a couple of ways to achieve this, but without a doubt, creating benchmarks and analyzing an architecture on real hardware (or as my colleague Gary Tully says, "asking the machine") is step one. But what open source options do you have for creating a set of comprehensive benchmarks? If you have experience with some good ones, please let me know in the comments. The projects that I could think of:

- Apache JMeter

- ActiveMQ perf plugin

- FuseSource JMSTester

- Hiram Chirino's jms-benchmark

While chatting with Gary about setting up test scenarios for ActiveMQ, he recalled there was a very interesting project that appeared dead sitting in the FuseSource Forge repo named JMSTester. He suggested I take a look at it. I did, and I was impressed at its current capabilities. It was created by a former FuseSource consultant, Andres Gies, through many iterations with clients, flights, and free-time hacking. I have since taken it over and I will be adding features, tests, docs, and continuing the momentum it once had.

But even before I can get my creative hands in there, I want to share with you the power it has at the moment.

Purpose

The purpose of this blog entry is to give a tutorial-like introduction to the JMSTester tool. The purpose of the tool is to provide a powerful benchmarking framework to create flexible, distributed JMS tests while monitoring/recording stats critical to have on-hand before making tweaks and tuning your JMS layer.

Some of the docs on the JMSTester homepage are slightly out of date, but the steps that describe some of the benchmarks are still accurate. This tutorial requires you to download the SNAPSHOT I've been working on, which can be found here: jmstester-1.1-20120904.213157-5-bin.tar.gz. I will be deploying the next version of the website soon, which should have more up-to-date versions of the binaries. When I do that, I'll update this post.

Meet the JMSTester tool

The JMSTester tool is simply a tool that sends and receives JMS messages. You use profiles defined in Spring context config files to specify what sort of load you want to throw at your message broker. JMSTester allows you to define the number of producers you wish to use, the number of consumers, the connection factories, JMS properties (transactions, session acks), etc. But the really cool part is you can run the benchmarks distributed over many machines. This means you can set up some machines to act specifically as producers and different ones to act as consumers. As far as monitoring and collecting the stats for benchmarking, JMSTester captures information in three different categories:

- Basic: message counts per consumer, message size

- JMX: monitor any JMX properties on the broker as the tests run, including number of threads, queue size, enqueue time, etc.

- Machine: CPU, system memory, swap, file system metrics, network interface, route/connection tables, etc

The Hyperic SIGAR library is used to capture the machine-level stats (group 3) and the RRD4J library is used to log the stats and output graphs. At the moment, I believe the graphs are pretty basic and I hope to improve upon those, but the raw data is always dumped to a CSV file and you can use your favorite spreadsheet software to create your own graphs.

Architecture

The JMSTester tool is made up of the following concepts:

- Controller

- Clients

- Recorder

- Frontend

- Benchmark Configuration

Controller

The controller is the organizer for the benchmark. It keeps track of who's interested in benchmark commands, starts the tests, keeps track of the number of consumers, the number of producers, etc. The benchmark cannot run without a controller. For those of you interested, the underlying architecture of the JMSTester tool relies on messaging, and ActiveMQ is the broker that the controller starts up for the rest of the architecture to work.

Clients

Clients are containers that take commands and can emulate the role of Producer, Consumer or both or neither (this will make sense further down). You can have as many clients as you want. You give them unique names and use their names within your benchmark configuration files. The clients can run anywhere, including on separate machines or all on one machine.

Recorder

The clients individually record stats and send the data over to the recorder. The recorder ends up organizing the stats and assembling the graphs, RRD4J databases, and benchmark csv files.

Frontend

The frontend is what sends commands to the controller. Right now there is only a command-line front end, but my intentions include a web-based front end with a REST-based controller that can be used to run the benchmarks.

Benchmark Configuration

The configuration files are Spring context files that specify beans that instruct the controller and clients how to run the benchmark. In these config files, you can also specify what metrics to capture and what kind of message load to send to the JMS broker. Going forward, I aim to improve these config files, including adding custom namespace support to make the config less verbose.

Let's Go!

The JMSTester website has a couple of good introductory tutorials:

- Simple: http://jmstester.fusesource.org/documentation/manual/TutorialSimple.html

- JMX Probes: http://jmstester.fusesource.org/documentation/manual/TutorialProbes.html

- Distributed: http://jmstester.fusesource.org/documentation/manual/TutorialDistributed.html

They are mostly up-to-date, but I'll continue to update them as I find errors.

One caveat about the distributed tutorial: it doesn't actually set up a distributed example. It separates out the clients, but they all run on the same local machine. There are just a couple of other parameters that need to be set to distribute it, which we'll cover here.

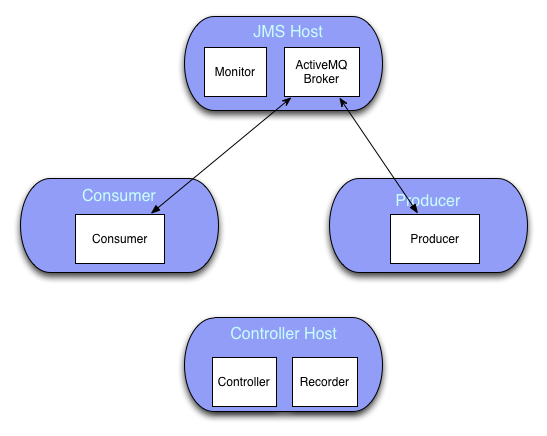

The architecture for the tutorial will be the following:

Let's understand the diagram really quickly.

The JMS Host will have two processes running: the ActiveMQ broker we'll be testing, and a JMSTester client container named Monitor. This container will be neither a producer nor a consumer, but instead will be used only to monitor machine and JMX statistics. The statistics will be sent back to the recorder on the Controller Host as described in the Recorder section above. The Producer and Consumer containers will run on separate machines named, respectively, Producer and Consumer. Lastly, the Controller Host machine will run the Controller and Recorder components of the distributed test.

Initial Setup

Download and extract the JMSTester binaries on each machine that will be participating in the benchmark.

Starting the Controller and Recorder containers

On the machine that will host the controller, navigate to the $JMSTESTER_HOME dir and type the following command to start the controller and the recorder:

./bin/runBenchmark -controller -recorder -springConfigLocations conf/testScripts

Note that everything must be typed exactly as it is above, with no trailing spaces after 'conf/testScripts'.

This is a quirk that I plan to fix as part of my future enhancements.

Once you've started the controller and recorder, you should be ready to start up the rest of the clients. The controller starts up an embedded broker that the clients will end up connecting to.

Starting the Producer container

On the machine that will host the producer, navigate to the $JMSTESTER_HOME dir, and type the following command:

./bin/runBenchmark -clientNames Producer -hostname domU-12-31-39-16-41-05.compute-1.internal

For the -hostname parameter, you must specify the host name where you started the controller. I'm using Amazon EC2 above, and if you're doing the same, prefer to use the internal DNS name for the hosts.

Starting the Consumer container

For the consumer container, you'll be doing the same thing you did for the producer, except you give it a client name of Consumer:

./bin/runBenchmark -clientNames Consumer -hostname domU-12-31-39-16-41-05.compute-1.internal

Again, the -hostname parameter should reflect the host on which you're running the controller.

Setting up ActiveMQ and the Monitor on JMS Host

Setting up ActiveMQ is beyond the scope of this article.

But you will need to enable JMX on the broker. Just follow the instructions found on the Apache ActiveMQ website.

This next part is necessary to allow the machine-level probe/monitoring. You'll need to install the SIGAR libs. They are not distributed with JMSTester because of their license, and their JNI libs are not available in Maven. Basically all you need to do is download and extract the [SIGAR distro from here][sigar-distro] and copy all of the libs from the $SIGAR_HOME/sigar-bin/lib folder into your $JMSTESTER_HOME/lib folder.
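If you have several machines to prepare, the copy step can be scripted. The following is only a minimal sketch: the function name `copy_sigar_libs` is my own invention, and it assumes the SIGAR distro has the `sigar-bin/lib` layout described above.

```python
import shutil
from pathlib import Path


def copy_sigar_libs(sigar_home, jmstester_home):
    """Copy every file in <sigar_home>/sigar-bin/lib into <jmstester_home>/lib.

    Returns the sorted names of the files that were copied.
    """
    src = Path(sigar_home) / "sigar-bin" / "lib"
    dst = Path(jmstester_home) / "lib"
    if not src.is_dir():
        raise FileNotFoundError(f"SIGAR lib folder not found: {src}")
    dst.mkdir(parents=True, exist_ok=True)
    copied = []
    for item in sorted(src.iterdir()):
        if item.is_file():  # jars and native libs; subfolders are skipped
            shutil.copy2(item, dst / item.name)
            copied.append(item.name)
    return copied
```

You would call it with the values of your own $SIGAR_HOME and $JMSTESTER_HOME, for example `copy_sigar_libs(os.environ["SIGAR_HOME"], os.environ["JMSTESTER_HOME"])` after an `import os`.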

Now start the Monitor container with a command similar to the one used for the producer and consumer:

./bin/runBenchmark -clientNames Monitor -hostname domU-12-31-39-16-41-05.compute-1.internal
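Since the three client commands differ only in the client name, a small helper can generate them once you know your controller's host name. This is just a sketch: the function names are hypothetical, and the only flags it uses are the `-clientNames` and `-hostname` flags shown above.

```python
def client_command(client_name, controller_host):
    """Return the argv list that starts one JMSTester client container."""
    return ["./bin/runBenchmark",
            "-clientNames", client_name,
            "-hostname", controller_host]


def tutorial_commands(controller_host):
    """Map each tutorial client name to the command line to run on its machine."""
    return {name: " ".join(client_command(name, controller_host))
            for name in ("Producer", "Consumer", "Monitor")}


if __name__ == "__main__":
    # Controller host name taken from the examples above; use your own.
    host = "domU-12-31-39-16-41-05.compute-1.internal"
    for name, cmd in tutorial_commands(host).items():
        print(f"{name}: {cmd}")
```

Each printed line is meant to be run from the $JMSTESTER_HOME dir on the machine that hosts that client.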

Submitting the tutorial testcase

We can submit the testcase from any computer. I've chosen to do it from my local machine. You'll notice the machine from which you submit the testcase isn't reflected in the diagram from above; this is simply because we can do it from any machine. Just like the other commands, however, you'll still need the JMSTester binaries.

Before we run the test, let's take a quick look at the Spring config file that specifies the test. To do so, open up $JMSTESTER_HOME/conf/testScripts/tutorial/benchmark.xml in your favorite text editor, preferably one that color-codes XML documents so it's easier to read. The benchmark file is annotated with a lot of comments that describe the individual sections clearly. If something is not clear, please ping me so I can provide more details.

There are a couple places in the config where you'll want to specify your own values to make this a successful test. Unfortunately, this is a manual process at the moment, but I plan to fix that up.

Take a look at where the JMS broker connection factories are created. In this case, that would be where the ActiveMQ connection factories are created (lines 120 and 124). The URL that goes here is the URL for the ActiveMQ broker you started in one of the previous sections. Because the example is distributed, there is an EC2 host URL in there. You must specify your own host. Again, if you use EC2, prefer the internal DNS names.

Then, take a look at line 169 where the AMQDestinationProbe is specified. This probe is a JMX probe specific to ActiveMQ. You must change the brokerName property to match whatever you named your broker when you started it (usually found in the <broker brokerName="name here"> section of your broker config).
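Before submitting, it can help to double-check which values actually ended up in the file. Here is a rough sketch that lists the `value` of every `<property>` with a given name in a Spring context file. The sample XML is a made-up fragment (the bean ids and class names are hypothetical, not the real contents of benchmark.xml), and properties whose value is given as a nested element rather than a `value` attribute will show up as `None`.

```python
import xml.etree.ElementTree as ET


def spring_property_values(xml_text, property_name):
    """Return the value attribute of every <property> with the given name.

    Matches on the local tag name so it works with or without the Spring
    beans XML namespace.
    """
    root = ET.fromstring(xml_text)
    values = []
    for elem in root.iter():
        local_name = elem.tag.rsplit("}", 1)[-1]  # strip "{namespace}" if present
        if local_name == "property" and elem.get("name") == property_name:
            values.append(elem.get("value"))
    return values


# Hypothetical fragment; bean ids and class names are for illustration only.
SAMPLE = """
<beans xmlns="http://www.springframework.org/schema/beans">
  <bean id="exampleBenchmark" class="example.Benchmark">
    <property name="benchmarkId" value="tutorialBenchmark"/>
  </bean>
  <bean id="exampleProbe" class="example.DestinationProbe">
    <property name="brokerName" value="localhost"/>
  </bean>
</beans>
"""

if __name__ == "__main__":
    print(spring_property_values(SAMPLE, "brokerName"))
```

To check your real config, read the file's text and pass it in place of `SAMPLE`. The sketch only reads the file, so the comments in benchmark.xml are left untouched.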

Finally, from the $JMSTESTER_HOME dir, run the following command:

./bin/runCommand -command submit:conf/testScripts/tutorial -hostname ec2-107-21-69-197.compute-1.amazonaws.com

Again, note that I'm setting the -hostname parameter to the host that the controller is running on. In this case, we'll prefer the public DNS of EC2, but it would be whatever you have in your environment.

Output

There you have it. You've submitted the testcase to the benchmark framework. You should see some activity on each one of the clients (producer, consumer, monitor) as well as on the controller. If your test has run correctly and all of the raw data and graphs have been produced, you should see logging output similar to the following:

Written probe Values to : /home/ec2-user/dev/jmstester-1.1-SNAPSHOT/tutorialBenchmark/benchmark.csv

Note that all of the results are written to tutorialBenchmark which is the name of the test as defined by the benchmarkId in the Spring config file on line 18:

<property name="benchmarkId" value="tutorialBenchmark"/>

If you take a look at the benchmark.csv file, you'll see all of the stats that were collected. The stats for this tutorial that were collected include the following:

- message count

- message size

- JMX QueueSize

- JMX ThreadCount

- SIGAR CpuMonitor

- SIGAR Free System Memory

- SIGAR Total System Memory

- SIGAR Free Swap

- SIGAR Total Swap

- SIGAR Swap Page In

- SIGAR Swap Page Out

- SIGAR Disk Reads (in bytes)

- SIGAR Disk Writes (in bytes)

- SIGAR Disk Reads

- SIGAR Disk Writes

- SIGAR Network RX BYTES

- SIGAR Network RX PACKETS

- SIGAR Network TX BYTES

- SIGAR Network RX DROPPED

- SIGAR Network TX DROPPED

- SIGAR Network RX ERRORS

- SIGAR Network TX ERRORS
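If you'd rather not open a spreadsheet, a few lines of Python can give you a quick min/mean/max per stat. Treat this as a sketch under assumptions: I'm assuming the CSV has a header row followed by one row per sample, and the column names in the sample data are invented for illustration, so adjust it to whatever your benchmark.csv actually contains.

```python
import csv
import io
from statistics import mean


def summarize_csv(csv_text):
    """Return {column: (min, mean, max)} for every column whose values are all numeric."""
    reader = csv.DictReader(io.StringIO(csv_text))
    columns = {}
    for row in reader:
        for name, raw in row.items():
            columns.setdefault(name, []).append(raw)
    summary = {}
    for name, raws in columns.items():
        try:
            numbers = [float(r) for r in raws]
        except (TypeError, ValueError):
            continue  # skip non-numeric, blank, or ragged columns
        if numbers:
            summary[name] = (min(numbers), mean(numbers), max(numbers))
    return summary


# Made-up sample data; the real column names and layout may differ.
SAMPLE = """timestamp,QueueSize,ThreadCount,note
1,0,40,start
2,150,42,
3,90,41,end
"""

if __name__ == "__main__":
    for column, (low, avg, high) in summarize_csv(SAMPLE).items():
        print(f"{column}: min={low} mean={avg} max={high}")
```

For a real run, read the text of the benchmark.csv file from your results folder and pass it to `summarize_csv`.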

That's it

I highly recommend taking a look at this project. I have taken it over and will be improving it as time permits, but I would very much value any thoughts or suggestions about how to improve it or what use cases to support. Take a look at the documentation already there, and I will be adding more as we go.

If you have questions, or something didn't work properly as described above, please shoot me a comment, email or find me in the Apache IRC channels... I'm usually in at least #activemq and #camel.